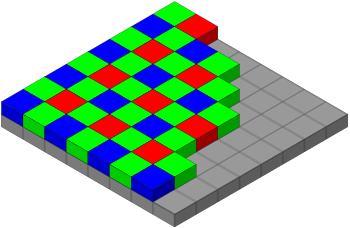

One thing I thought might be a problem is that the pixels on a camera don't each measure all 3 colours. Instead, a colour filter over the sensor (typically a Bayer pattern) means each pixel measures only one colour, and the missing colours are then interpolated from neighbouring pixels. This isn't a problem if the object being photographed spans many pixels, but what if the object is a tiny bright dot, as in our situation?
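To make the concern concrete, here is a minimal pure-Python sketch of what happens to a perfectly focused white dot that covers a single pixel. It assumes an RGGB Bayer layout and plain bilinear demosaicing. The camera's actual filter layout and interpolation algorithm aren't known here and may well differ. The helper names (`bayer_channel`, `mosaic`, `demosaic_at`, `single_pixel_dot`) are hypothetical.

```python
# Minimal sketch, ASSUMING an RGGB Bayer colour filter array and simple
# bilinear demosaicing (the real camera's layout and algorithm may differ).


def bayer_channel(row, col):
    """Colour measured by the sensor pixel at (row, col) in an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(image):
    """Keep only the one value each pixel actually measures.

    image[row][col] is an (R, G, B) tuple; the result is a 2-D list of floats.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(image)]


def demosaic_at(raw, row, col):
    """Bilinear demosaic of one interior (non-border) pixel.

    The pixel's own colour is taken as measured; each missing colour is the
    mean of the neighbouring pixels in the 3x3 window that measure it.
    """
    own = bayer_channel(row, col)
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(row - 1, row + 2):
        for c in range(col - 1, col + 2):
            if (r, c) != (row, col):
                ch = bayer_channel(r, c)
                sums[ch] += raw[r][c]
                counts[ch] += 1
    sums[own], counts[own] = raw[row][col], 1
    return tuple(sums[ch] / counts[ch] for ch in "RGB")


def single_pixel_dot(size, row, col):
    """A black image with one pure-white pixel: a perfectly focused dot."""
    image = [[(0.0, 0.0, 0.0)] * size for _ in range(size)]
    image[row][col] = (1.0, 1.0, 1.0)
    return image


if __name__ == "__main__":
    # Land the same white dot on a red, a green and a blue filter in turn.
    for row, col in [(4, 4), (4, 5), (5, 5)]:
        raw = mosaic(single_pixel_dot(8, row, col))
        print((row, col), bayer_channel(row, col), demosaic_at(raw, row, col))
```

Because the missing colours are guessed from neighbours that received no light, the white dot comes out pure red, pure green or pure blue depending on which filter it happens to land on.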

An example photo of “What the Ladybird Heard”:

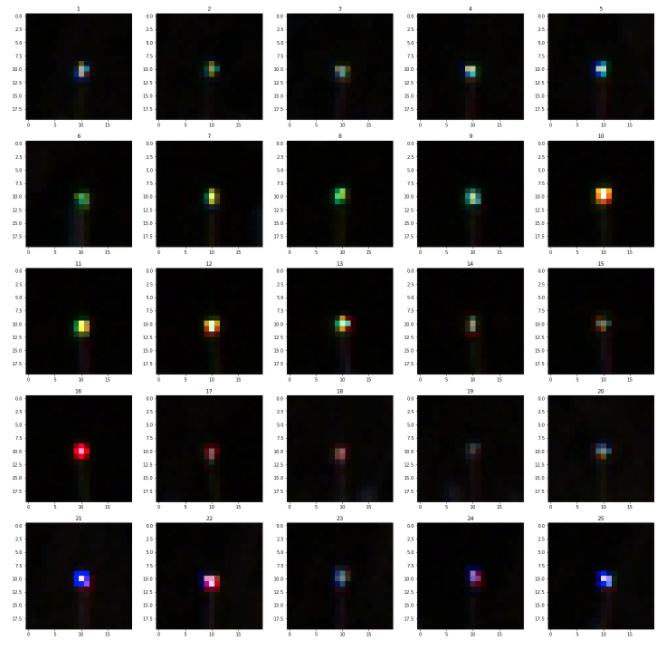

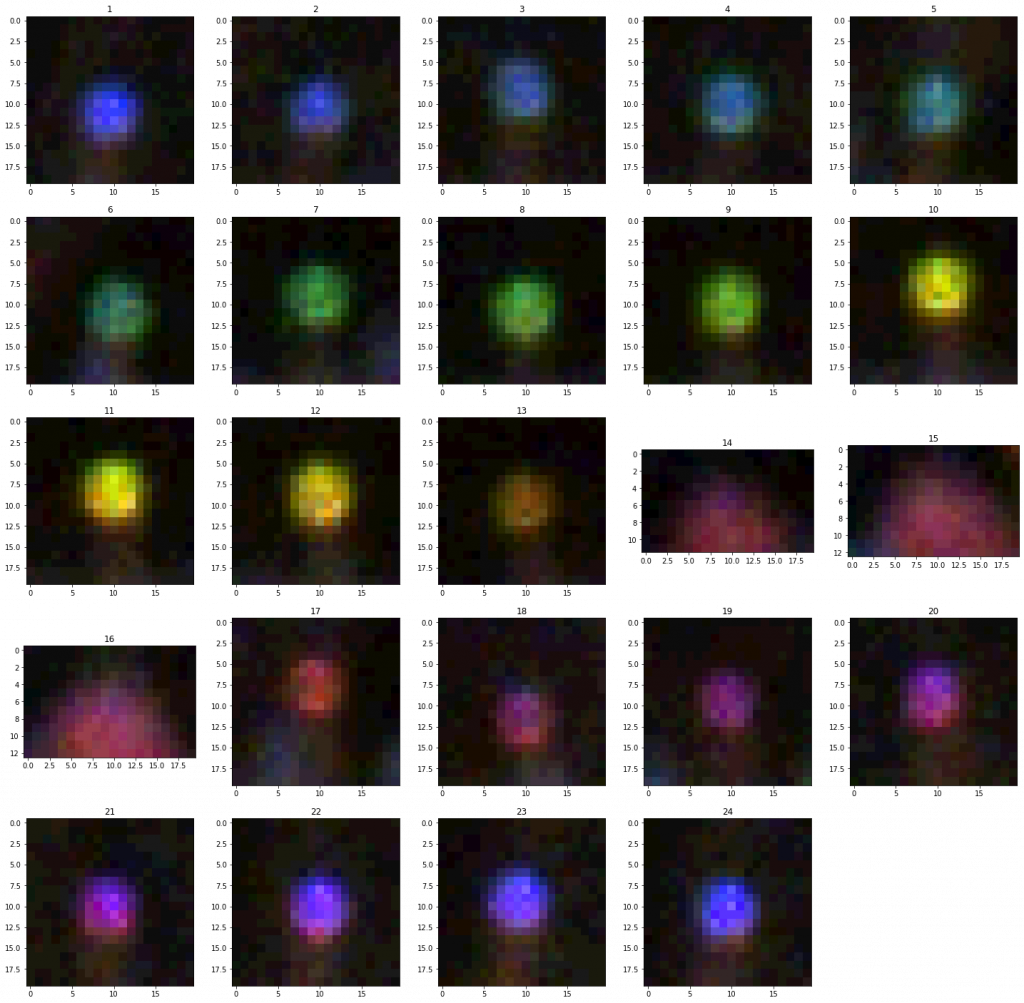

Sadly, this filter problem seems to be affecting our bee orientation/ID experiment. Here I rotate a tag through 360°:

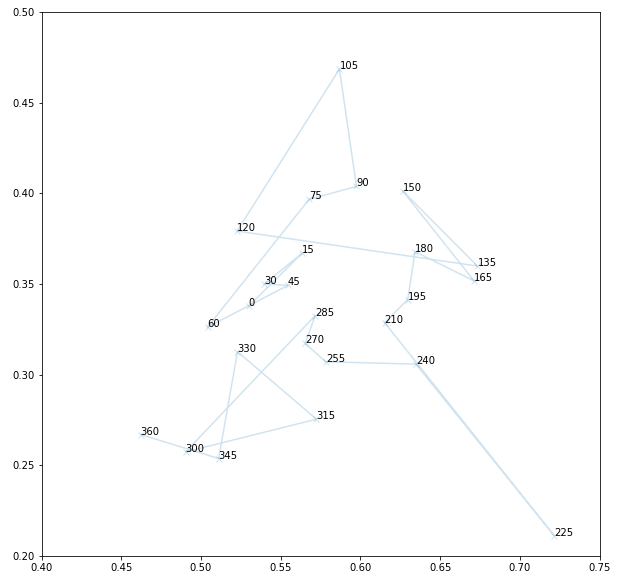

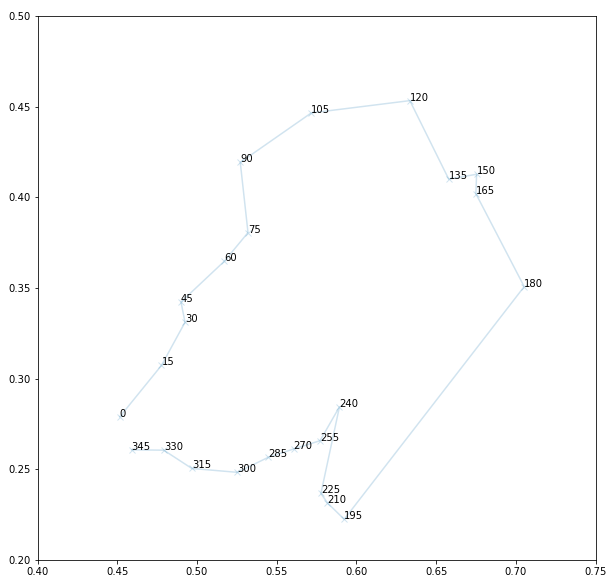

This results in less accurate predictions of orientation:

If we adjust the lens focus so that the tag is no longer in focus, each dot spreads over several pixels and the colours are recorded more reliably:
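A rough sketch of why defocusing helps, again assuming an RGGB Bayer layout. Defocus is modelled here as a Gaussian blur of width `sigma` pixels. The dot's colour is estimated by a hypothetical method (sum each channel's raw samples over the whole dot, then rescale by the fraction of pixels of that colour), not by the camera's own interpolation. All function names are illustrative.

```python
import math

# Minimal sketch, ASSUMING an RGGB Bayer layout and modelling defocus as a
# Gaussian blur of width `sigma` pixels (both assumptions for illustration).


def bayer_channel(row, col):
    """Index (0=R, 1=G, 2=B) of the colour measured at (row, col) in RGGB."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def raw_white_dot(size, row0, col0, sigma):
    """Raw sensor values for a white dot centred on pixel (row0, col0).

    sigma == 0 means perfectly focused (all light on one pixel); otherwise the
    light is spread with a Gaussian profile. Every channel receives the same
    light, so the true colour is white.
    """
    raw = [[0.0] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            d2 = (r - row0) ** 2 + (c - col0) ** 2
            if sigma == 0:
                raw[r][c] = 1.0 if d2 == 0 else 0.0
            else:
                raw[r][c] = math.exp(-d2 / (2 * sigma ** 2))
    return raw


def estimate_colour(raw):
    """Hypothetical colour estimate: total signal per channel, rescaled by the
    share of pixels of that colour (1/4 R, 1/2 G, 1/4 B), then normalised so
    the brightest channel is 1."""
    totals = [0.0, 0.0, 0.0]
    for r, row in enumerate(raw):
        for c, value in enumerate(row):
            totals[bayer_channel(r, c)] += value
    scaled = [totals[0] * 4, totals[1] * 2, totals[2] * 4]
    peak = max(scaled)
    return tuple(s / peak for s in scaled)


if __name__ == "__main__":
    for sigma in (0, 1.0):
        colour = estimate_colour(raw_white_dot(21, 10, 10, sigma))
        print(f"sigma={sigma}: estimated colour {tuple(round(x, 2) for x in colour)}")
```

With `sigma=0` the estimate is pure red; with `sigma=1` the three channels come out roughly equal (about (1.0, 0.97, 0.94)), because the spread-out dot now falls on pixels of every colour.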

This leads to more reliable orientation predictions:

I think the plan now is to:

- Collect more data, but download it raw (without interpolation); this also saves bandwidth from the camera, as each pixel sends one colour value rather than three.

- Look at fitting the point spread function (PSF) using this raw data.

- Maybe leave the camera just a little out of focus, so that each dot covers enough pixels for all the colours to be detected.
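One possible way to fit the PSF to raw data is sketched below. Several assumptions here are not from the experiment itself: an RGGB layout, a circular Gaussian PSF, a separate amplitude per colour channel (which would then give the dot's colour), and a brute-force grid search over centre and width. The synthetic frame and all function names are hypothetical. Because the model is compared only against the values each pixel actually measured, no interpolated colours enter the fit.

```python
import math

# Minimal sketch of fitting a PSF directly to raw Bayer data. ASSUMPTIONS:
# RGGB layout, circular Gaussian PSF, one amplitude per colour channel,
# brute-force grid search over centre and width.


def bayer_channel(row, col):
    """Index (0=R, 1=G, 2=B) of the colour measured at (row, col) in RGGB."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def gaussian(row, col, y0, x0, sigma):
    """Unit-peak circular Gaussian PSF centred at (y0, x0)."""
    return math.exp(-((row - y0) ** 2 + (col - x0) ** 2) / (2 * sigma ** 2))


def render_raw(size, y0, x0, sigma, amps):
    """Synthetic noise-free raw frame: each pixel sees only its own channel."""
    return [[amps[bayer_channel(r, c)] * gaussian(r, c, y0, x0, sigma)
             for c in range(size)] for r in range(size)]


def fit_amplitudes(raw, y0, x0, sigma):
    """For a fixed centre and width the model is linear in the three channel
    amplitudes, so each has a closed-form least-squares solution.
    Returns (amplitudes, sum of squared residuals)."""
    num = [0.0, 0.0, 0.0]  # sum of psf * data, per channel
    den = [0.0, 0.0, 0.0]  # sum of psf ** 2, per channel
    total = 0.0            # sum of data ** 2
    for r, row in enumerate(raw):
        for c, value in enumerate(row):
            g = gaussian(r, c, y0, x0, sigma)
            ch = bayer_channel(r, c)
            num[ch] += g * value
            den[ch] += g * g
            total += value * value
    amps = [n / d if d > 0 else 0.0 for n, d in zip(num, den)]
    # Residual of a linear least-squares fit: sum(v^2) - sum(num^2 / den).
    residual = total - sum(n * n / d for n, d in zip(num, den) if d > 0)
    return amps, residual


def fit_psf(raw, centres, sigmas):
    """Try every candidate centre and width; keep the lowest residual."""
    best = None
    for y0, x0 in centres:
        for sigma in sigmas:
            amps, residual = fit_amplitudes(raw, y0, x0, sigma)
            if best is None or residual < best[0]:
                best = (residual, (y0, x0), sigma, amps)
    return best


if __name__ == "__main__":
    # Synthetic coloured dot: strong red, some green, little blue.
    raw = render_raw(9, 4.3, 3.6, 1.2, (0.9, 0.5, 0.2))
    grid = [round(3.0 + 0.1 * i, 1) for i in range(21)]
    centres = [(y, x) for y in grid for x in grid]
    residual, centre, sigma, amps = fit_psf(raw, centres, [0.8, 1.0, 1.2, 1.4, 1.6])
    print(centre, sigma, [round(a, 3) for a in amps])
```

On real frames the grid search would likely be replaced by a proper optimiser and the model extended with noise and background, but the closed-form per-channel amplitude step would carry over.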