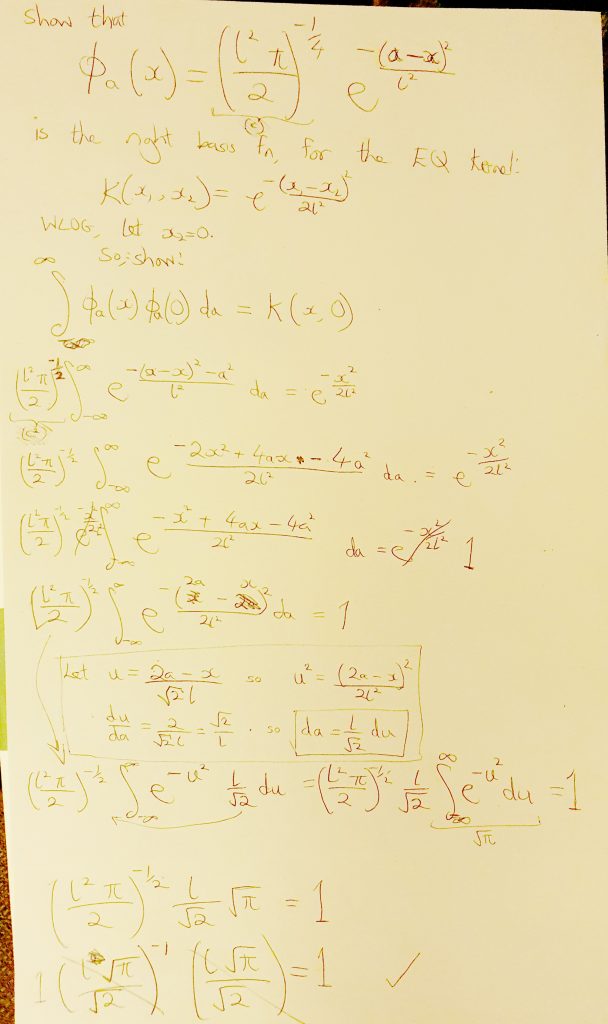

I understand that the EQ kernel (and other kernels) can be understood, via the kernel trick, as an infinite number of (appropriate) basis functions. I've not found the actual proof of this online (I'm sure it's somewhere, but I clearly didn't know what to search for [edit: Turns out some of it is in the covariance functions chapter in Gaussian Processes for Machine Learning]). It's straightforward, but I wanted to see it so that I would know what constants (lengthscale and height) my bases needed to have.

Without loss of generality (hopefully) I've just considered the kernel evaluated between $0$ and $x$. This should be fine as the EQ kernel is stationary: it depends only on the difference between its two inputs.

So:

The EQ kernel: $k(0, x) = \sigma^2 \exp\left(-\frac{x^2}{2l^2}\right)$

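We believe that an infinite number of Gaussian bases, $\phi_c(x) = a \exp\left(-\frac{(x - c)^2}{2w^2}\right)$, one centred at every $c \in \mathbb{R}$, will produce the EQ kernel, i.e. $k(0, x) = \int_{-\infty}^{\infty} \phi_c(0)\,\phi_c(x)\,dc$ for a suitable height $a$ and lengthscale $w$.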
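A worked version of the 1D integral, as a sketch. The symbols are my choice here: the EQ kernel is $k(0, x) = \sigma^2 \exp\left(-\frac{x^2}{2l^2}\right)$ and each basis is $\phi_c(x) = a \exp\left(-\frac{(x - c)^2}{2w^2}\right)$ with height $a$ and lengthscale $w$. Completing the square in $c$ (using $c^2 + (x - c)^2 = 2(c - x/2)^2 + x^2/2$):

$$
\begin{aligned}
\int_{-\infty}^{\infty} \phi_c(0)\,\phi_c(x)\,dc
&= a^2 \int_{-\infty}^{\infty} \exp\left(-\frac{c^2 + (x - c)^2}{2w^2}\right) dc \\
&= a^2 \exp\left(-\frac{x^2}{4w^2}\right) \int_{-\infty}^{\infty} \exp\left(-\frac{(c - x/2)^2}{w^2}\right) dc \\
&= a^2 \sqrt{\pi}\, w \exp\left(-\frac{x^2}{4w^2}\right).
\end{aligned}
$$

Matching this to $\sigma^2 \exp\left(-\frac{x^2}{2l^2}\right)$ term by term gives $4w^2 = 2l^2$ and $a^2 \sqrt{\pi}\, w = \sigma^2$, i.e. $w = l/\sqrt{2}$ and $a^2 = \frac{\sigma^2}{\sqrt{\pi}\, w} = \frac{\sqrt{2}\,\sigma^2}{\sqrt{\pi}\, l}$. So each basis is $\phi_c(x) = \sigma \left(\frac{2}{\pi}\right)^{1/4} l^{-1/2} \exp\left(-\frac{(x - c)^2}{l^2}\right)$: narrower than the kernel by a factor of $\sqrt{2}$, with a height that shrinks as the lengthscale grows.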

For multidimensional inputs:

The EQ kernel: $k(\mathbf{0}, \mathbf{x}) = \sigma^2 \exp\left(-\frac{\mathbf{x}^\top \mathbf{x}}{2l^2}\right)$

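We believe that an infinite number of Gaussian bases, $\phi_{\mathbf{c}}(\mathbf{x}) = a \exp\left(-\frac{(\mathbf{x} - \mathbf{c})^\top (\mathbf{x} - \mathbf{c})}{2w^2}\right)$, one centred at every $\mathbf{c} \in \mathbb{R}^D$, will produce the EQ kernel, i.e. $k(\mathbf{0}, \mathbf{x}) = \int_{\mathbb{R}^D} \phi_{\mathbf{c}}(\mathbf{0})\,\phi_{\mathbf{c}}(\mathbf{x})\,d\mathbf{c}$ for a suitable height $a$ and lengthscale $w$.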
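A quick numerical check, as a minimal sketch assuming NumPy and an isotropic EQ kernel $\sigma^2 \exp\left(-\frac{\lVert \mathbf{x} - \mathbf{x}' \rVert^2}{2l^2}\right)$. With Gaussian bases of height $a$ and lengthscale $w$, the $D$-dimensional integral factorises into $D$ copies of the 1D one, giving $a^2 (\sqrt{\pi}\, w)^D \exp\left(-\frac{\lVert \mathbf{x} - \mathbf{x}' \rVert^2}{4w^2}\right)$, so $w = l/\sqrt{2}$ as before and $a^2 = \sigma^2 / (\sqrt{\pi}\, w)^D$. The function names `eq_kernel` and `basis_kernel` are hypothetical, and the infinite set of bases is replaced by a finite regular grid of centres (width and resolution picked arbitrarily), so the integral becomes a Riemann sum.

```python
import numpy as np


def eq_kernel(x, xp, sigma2, l):
    """Isotropic EQ kernel sigma^2 * exp(-|x - x'|^2 / (2 l^2))."""
    d = np.asarray(x, float) - np.asarray(xp, float)
    return sigma2 * np.exp(-np.dot(d, d) / (2.0 * l**2))


def basis_kernel(x, xp, sigma2, l, half_width=10.0, n=201):
    """Approximate sum over centres c of phi_c(x) * phi_c(x') * (grid cell volume).

    Bases are a * exp(-|x - c|^2 / (2 w^2)) with w = l / sqrt(2) and
    a^2 = sigma^2 / (sqrt(pi) * w)^D; centres lie on a regular grid
    covering [-half_width, half_width]^D (a finite stand-in for R^D).
    """
    x = np.asarray(x, float)
    xp = np.asarray(xp, float)
    D = x.size
    w = l / np.sqrt(2.0)
    a2 = sigma2 / (np.sqrt(np.pi) * w) ** D

    grid = np.linspace(-half_width, half_width, n)
    dc = grid[1] - grid[0]
    # Every grid centre in D dimensions, shape (n**D, D).
    centres = np.stack(np.meshgrid(*([grid] * D), indexing="ij"), axis=-1).reshape(-1, D)

    # Unit-height basis responses at x and x'; the height enters once via a^2.
    phi_x = np.exp(-np.sum((centres - x) ** 2, axis=1) / (2.0 * w**2))
    phi_xp = np.exp(-np.sum((centres - xp) ** 2, axis=1) / (2.0 * w**2))
    return a2 * np.sum(phi_x * phi_xp) * dc**D


# Usage: the two columns should agree closely in 1D and 2D.
print(eq_kernel([0.0], [0.7], 2.0, 1.5), basis_kernel([0.0], [0.7], 2.0, 1.5))
print(eq_kernel([0.0, 0.0], [0.3, -0.9], 2.0, 1.5),
      basis_kernel([0.0, 0.0], [0.3, -0.9], 2.0, 1.5))
```

Because the grid spacing is small compared with the basis lengthscale and the grid extends far beyond the test points, the Riemann sum matches the closed form to many digits. A coarser or narrower grid shows how a finite set of bases only approximates the EQ kernel.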