Progress so far:

1) Automatic detection of the markers.

To make life easier in the long run, I made marker posts that are machine readable and, hopefully, visible from up to 50 m away through the tracking system’s optics (see here). The tool finds the location and orientation of each marker in the image, then uses the top and bottom of each post to help with alignment.

2) Automatic registration of the cameras and markers. This was quite hard.

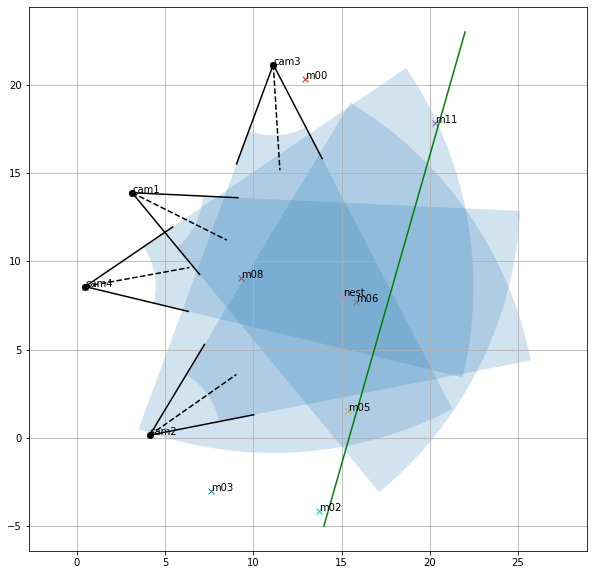

a) I’ve provided the distances between lots of pairs of landmarks and cameras (measured on the day), and approximate x,y coordinates for all of them. The tool then runs a coarse optimisation step to work out roughly where everything is (i.e. the x,y coordinates of all the objects are adjusted until the distances between them match the measured ones).

b) Once all the landmarks are located in an image from each of the cameras (see step 1), the orientation (yaw, pitch, roll), field of view (fov) and position of the cameras, and the positions of the markers, are optimised to make their 3d locations ‘match’ the photos.
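To give a feel for the rough optimisation in step (a), here is a minimal sketch, not the tool's actual code. It assumes plain gradient descent on the squared difference between current and measured distances; the function name `refine_positions`, the step size, and the example layout (two cameras and one post forming a 3-4-5 triangle) are all made up for illustration.

```python
import math

def refine_positions(initial, measured, steps=2000, rate=0.05):
    """Nudge approximate (x, y) positions until pairwise distances match.

    initial:  dict name -> (x, y) rough starting coordinates
    measured: dict (name_a, name_b) -> distance measured in the field
    """
    pos = {name: list(xy) for name, xy in initial.items()}
    for _ in range(steps):
        grad = {name: [0.0, 0.0] for name in pos}
        for (a, b), target in measured.items():
            dx = pos[a][0] - pos[b][0]
            dy = pos[a][1] - pos[b][1]
            dist = math.hypot(dx, dy) or 1e-9  # avoid dividing by zero
            err = dist - target
            # Gradient of 0.5 * err**2 with respect to the position of a;
            # the gradient for b is the same with the sign flipped.
            gx, gy = err * dx / dist, err * dy / dist
            grad[a][0] += gx
            grad[a][1] += gy
            grad[b][0] -= gx
            grad[b][1] -= gy
        for name in pos:
            pos[name][0] -= rate * grad[name][0]
            pos[name][1] -= rate * grad[name][1]
    return {name: tuple(xy) for name, xy in pos.items()}

# Hypothetical layout: rough guesses for two cameras and one marker post.
initial = {"cam1": (0.2, -0.3), "cam2": (3.4, 0.3), "post": (-0.2, 4.5)}
measured = {("cam1", "cam2"): 3.0, ("cam1", "post"): 4.0, ("cam2", "post"): 5.0}
fitted = refine_positions(initial, measured)
```

Distances alone only fix the layout up to a shift, rotation or mirror image, which is one reason the approximate starting coordinates matter: they keep the result in a sensible frame.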

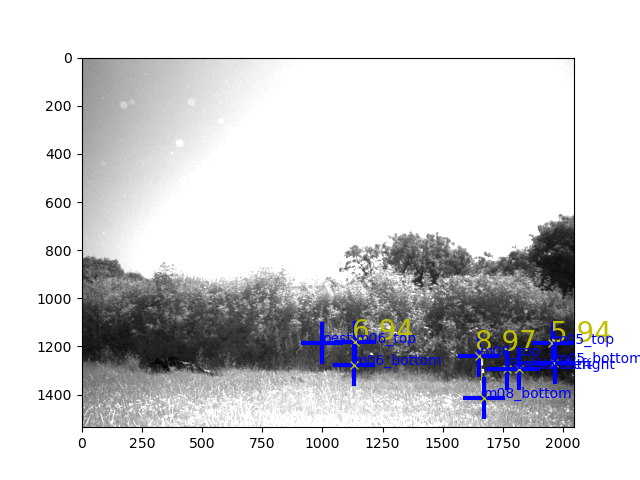

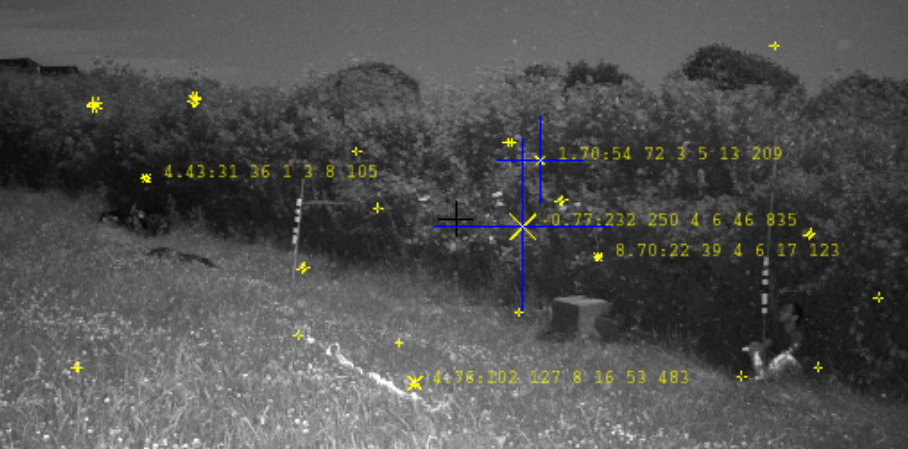
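For step (b), the quantity being optimised is roughly "how far are the projected landmarks from where they appear in the photo". Below is a minimal sketch of that error for a single camera, assuming a simple pinhole model with no lens distortion. The axis conventions (z up, yaw about the vertical, a horizontal fov), the `cam` dictionary layout and the function names are my assumptions for illustration, not necessarily what the tool uses.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def camera_axes(yaw, pitch, roll):
    """Unit forward/right/up vectors of the camera in world coordinates (z up)."""
    forward = (math.cos(pitch) * math.cos(yaw),
               math.cos(pitch) * math.sin(yaw),
               math.sin(pitch))
    right = (math.sin(yaw), -math.cos(yaw), 0.0)
    up = cross(right, forward)
    # Roll rotates the right/up pair about the optical (forward) axis.
    cr, sr = math.cos(roll), math.sin(roll)
    right_r = tuple(cr * r + sr * u for r, u in zip(right, up))
    up_r = tuple(-sr * r + cr * u for r, u in zip(right, up))
    return forward, right_r, up_r

def project(point, cam):
    """Project a 3d world point to pixel (u, v); None if it is behind the camera."""
    forward, right, up = camera_axes(cam["yaw"], cam["pitch"], cam["roll"])
    d = tuple(p - c for p, c in zip(point, cam["position"]))
    depth = dot(d, forward)
    if depth <= 0:
        return None
    focal = (cam["width"] / 2) / math.tan(cam["fov"] / 2)  # focal length in pixels
    u = cam["width"] / 2 + focal * dot(d, right) / depth
    v = cam["height"] / 2 - focal * dot(d, up) / depth  # image v grows downwards
    return (u, v)

def reprojection_error(cam, landmarks, observed):
    """Sum of squared pixel distances between projected and observed landmarks."""
    total = 0.0
    for point, (ou, ov) in zip(landmarks, observed):
        uv = project(point, cam)
        if uv is None:
            return math.inf
        total += (uv[0] - ou) ** 2 + (uv[1] - ov) ** 2
    return total

# Hypothetical camera and landmarks, just to exercise the functions.
cam = {"position": (0.0, 0.0, 0.0), "yaw": 0.0, "pitch": 0.0, "roll": 0.0,
       "fov": math.radians(90), "width": 1000, "height": 800}
landmarks = [(10.0, 0.0, 0.0), (10.0, -10.0, 0.0), (10.0, 0.0, 5.0)]
observed = [project(p, cam) for p in landmarks]
error_true = reprojection_error(cam, landmarks, observed)
error_off = reprojection_error(dict(cam, yaw=0.05), landmarks, observed)
```

An optimiser would adjust the camera parameters (and the marker positions) to drive this error down across all cameras at once; here a small yaw offset is enough to make `error_off` larger than `error_true`.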

3) Detect Bee. We then run the standard tag detection algorithm for each camera, which produces image coordinates of possible detected bees. [edit: we then manually confirm each detection].

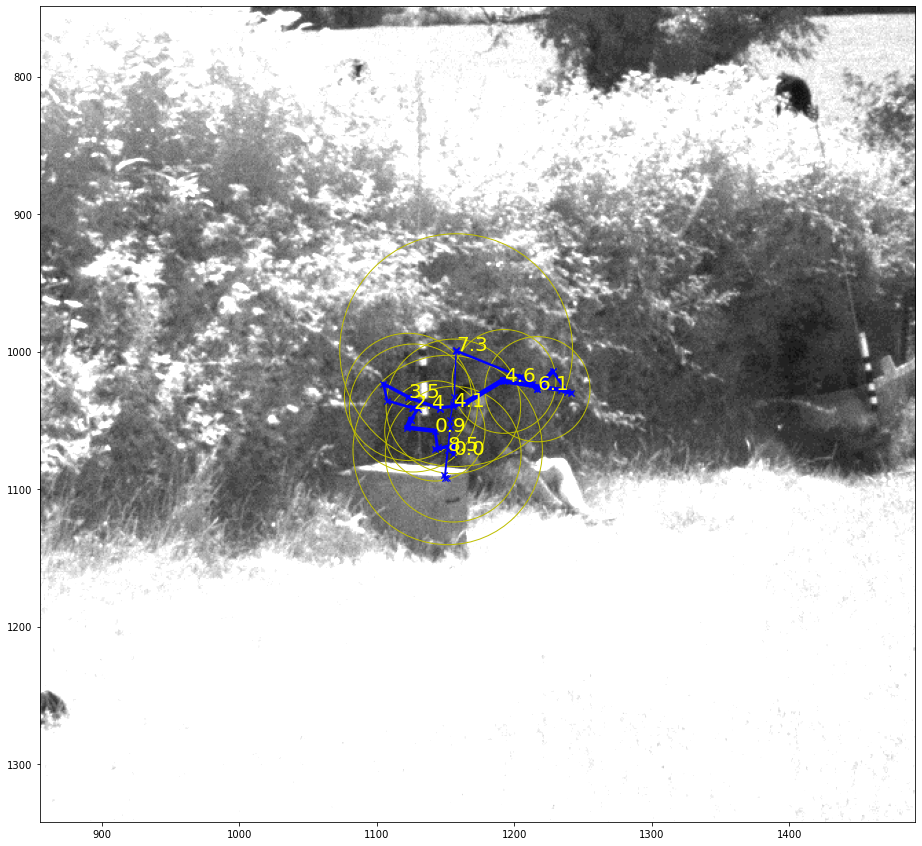

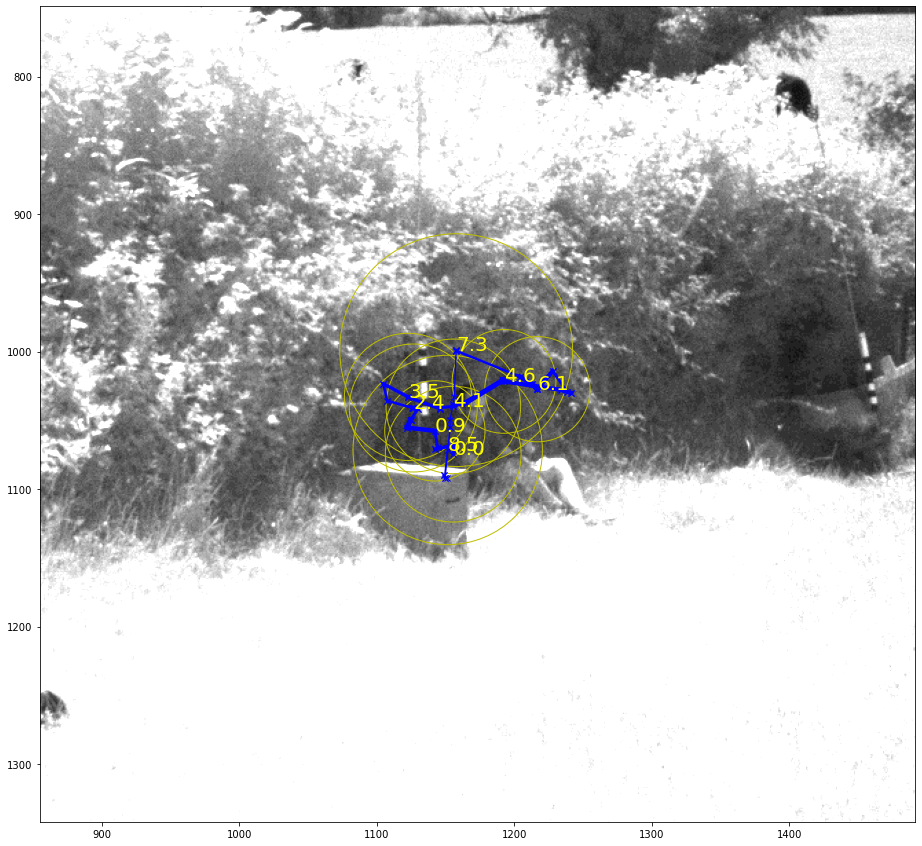

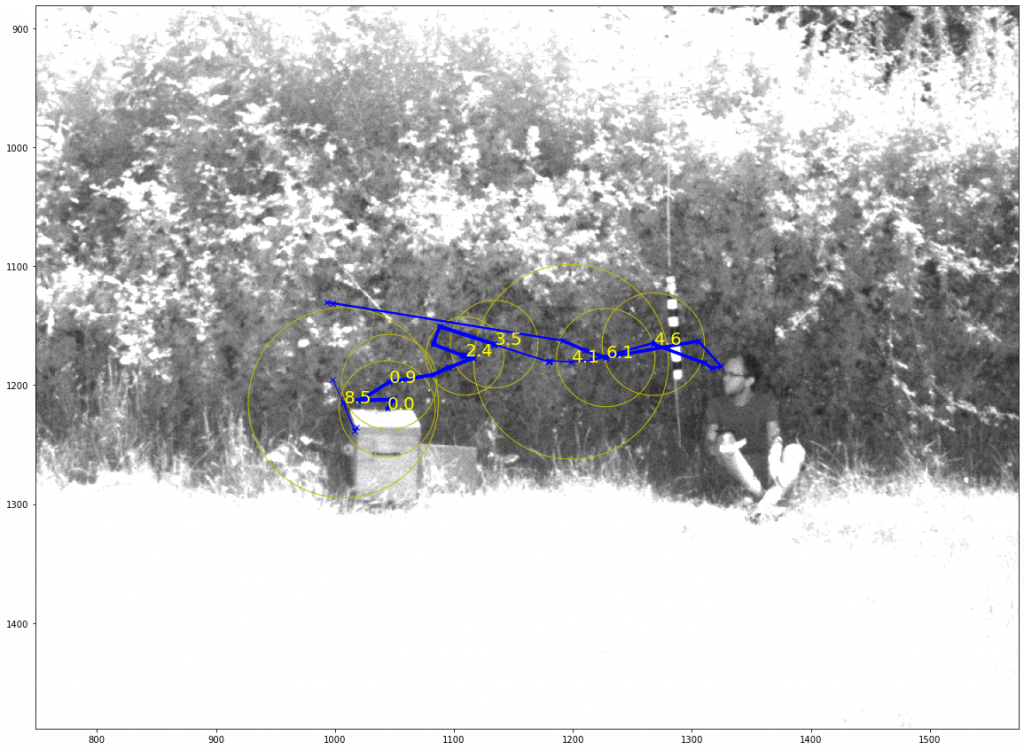

4) Compute 3d path. To get the 3d path, I’m using a particle filter/smoother [edit: later we use a Gaussian process, but the images here are from the PF] to model the path of the bee. This gives an estimated x,y,z position and a distribution over space (which gives us an idea of its confidence). The inputs are the camera configurations determined in step 2 and the image coordinates from step 3: each image coordinate equates to a line in 3d space that the bee might lie on. I’ll skip the details for now; the upshot is that you end up with a trajectory in 3d. I’ve rendered the trajectory back onto the 4 camera images.
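The "line the bee might lie on" idea is easiest to see with two cameras. The sketch below is only the geometric intuition, not the particle filter or Gaussian process used for the real paths: given one line per camera (a camera position plus a direction), it finds the point midway between the two lines where they pass closest to each other. The function name and the example coordinates are made up for illustration.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def closest_point_between_lines(p1, d1, p2, d2):
    """Midpoint of the shortest segment joining two 3d lines, plus its length.

    Each line is given by a point p (the camera position) and a direction d.
    Returns None if the lines are (nearly) parallel.
    """
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None
    s = (b * e - c * d) / denom  # distance along line 1, in units of d1
    t = (a * e - b * d) / denom  # distance along line 2, in units of d2
    q1 = tuple(p + s * v for p, v in zip(p1, d1))
    q2 = tuple(p + t * v for p, v in zip(p2, d2))
    midpoint = tuple((x + y) / 2 for x, y in zip(q1, q2))
    gap = sum((x - y) ** 2 for x, y in zip(q1, q2)) ** 0.5
    return midpoint, gap

# Hypothetical set-up: two cameras 10 m apart, both sighting a bee at (4, 6, 2).
cam1, cam2 = (0.0, 0.0, 0.0), (10.0, 0.0, 0.0)
ray1 = (4.0, 6.0, 2.0)    # direction from cam1 towards the bee
ray2 = (-6.0, 6.0, 2.0)   # direction from cam2 towards the bee
estimate, gap = closest_point_between_lines(cam1, ray1, cam2, ray2)
```

With noisy detections the lines no longer meet exactly, so `gap` gives a crude sense of how well two sightings agree. A filter or smoother goes further by combining many such lines over time and carrying a full distribution over position.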

The trajectories above are 3d, projected back onto the photos.

The nest had been moved a few hours earlier, so we wouldn’t necessarily expect any learning flights really.

Looking at the photos carefully, I think the bee heads left along the hedge. In the images below (2d detection), the blue crosses are where the system thinks it’s found a bee; the rest are just debugging info, i.e. where it’s looked. The smoother had low confidence about where the bee was after ~7 seconds. If I went through and manually clicked on the ‘correct’ targets, it would be able to reconstruct more of the path. Note the bee at the top of the hedge.

I’ve just run it on one flight so far (from the 20th at 13:58:00)!

After the first day at the field site, I got a bit nervous and moved the cameras a bit closer to the nest as I was worried the system wouldn’t see the bee. I think the bee is detected quite well, in retrospect, but the problem now is the cameras are slightly too close really! I should have had more faith in the system!